I’m really excited to share an upcoming new tool with you guys today – I think this could be a game-changer for how we can interact with our information. Whether it’s your notes, a book, or a podcast transcript, this new tool can allow you to use an AI assistant to query it.

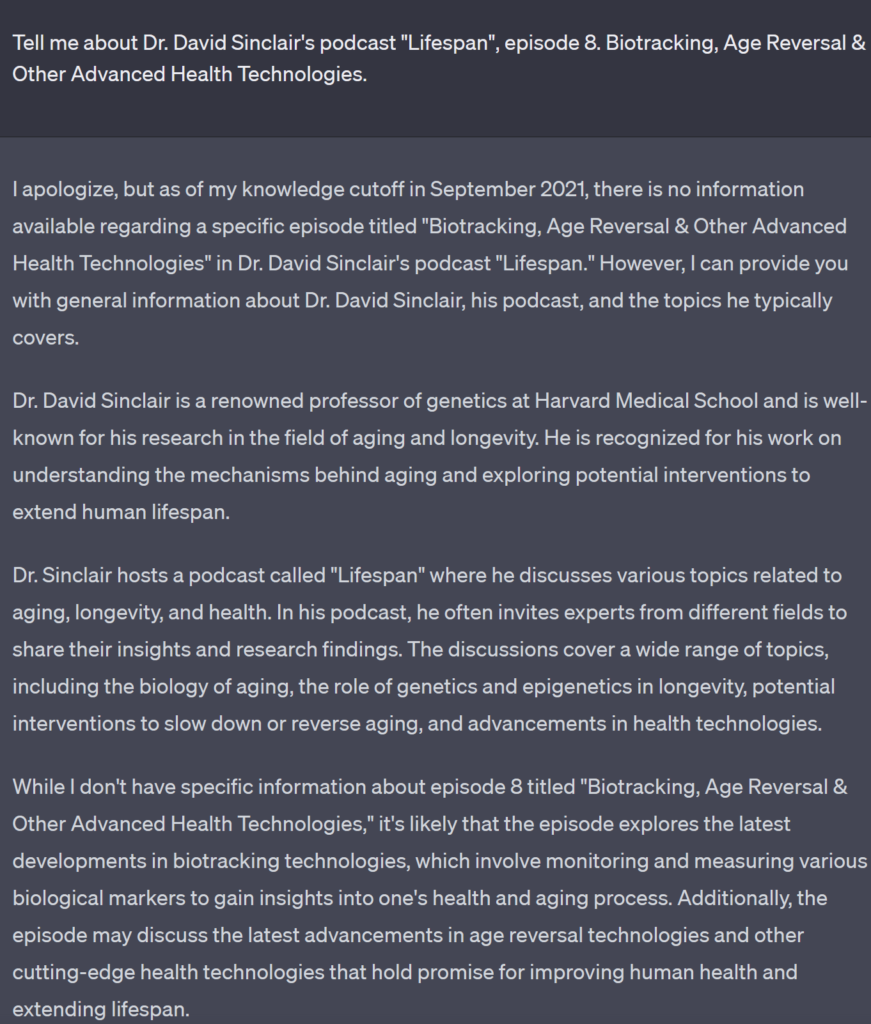

As many of you no doubt have noticed, AI has been moving in leaps and bounds this year. New tools have been coming out to write essays, generate transcripts, make art, and virtually anything you can think of. The big one that everyone’s probably heard about is a Large Language Model (LLM) called ChatGPT. Anyone who has used it can probably attest that it’s super cool, but has some unfortunate limitations – a few that have frustrated me most are its training cutoff date, its token limitations preventing you from asking it about anything long form, and the fact that it could sometimes provide confidently false answers, meaning I had to fact-check it.

Well, some friends and I got together to solve some of these problems, with a central goal of working on an AI assistant that could be reliably factual, show its sources, and work with data the user could select. What we came up with is called Pantheon. Pantheon lets you select your source documents and then use that data to help inform the AI assistant’s responses. This has several excellent advantages:

It helps ensure accuracy

“Hallucinations” are a common problem with Large Language Models, where they make up information – and state it with confidence. For example, asking an LLM like ChatGPT for a citation will often result in a real-looking but false or non-existent citation, or a real citation that doesn’t actually match the statement. Pantheon, on the other hand, provides the model (and the user) a citation from the source document, which is used to directly inform the answer. We really wanted to make is so that the user could always follow the AI’s conclusions back to their source material, especially if they were using it for something like academic research.

It allows you to feed large amounts of data to the model

A frustration many users have with ChatGPT is its token limit – sometimes we want to pass the model a lot of data, such as entire books or podcast transcripts. This is impossible with ChatGPT’s current setup, as pasting any more than a predefined limit of tokens will simply prompt the user to provide a shorter query. Pantheon’s solution to this problem is to make use of a database as the model’s “memory”, meaning you don’t need to paste your entire document into the query. This method stores your documents in a vectorized database first, and your query can therefore first search a potentially vast repository of source documentation to find the most relevant sources before passing this data to the LLM, which can parse it for you into a more cohesive summary.

It allows you to overcome ChatGPT’s training limitations

Any data past an LLM model’s training date are not accessible by the model, for example books, web pages, or podcasts published after 2021. And, of course, the LLM is normally not aware of your personal notes either. This method allows a user to upload new data and pass it to the model.

It allows you to choose your sources

Pantheon is designed so that users can create and share ”Documents”, which are used as source texts for the model, and “Projects”, which are collections of documents. The user can then ask Pantheon to only query specific documents or projects, rather than the vast repository of an LLM’s training material, which could include considerable amounts of data you don’t trust or care about. This allows you to ask targeted questions to a specific book, podcast, author, or genre of information. It also means you can tailor your collection to provide answers from sources you trust, such as academic papers.

Anyone who has conducted research is likely familiar with the experience of first collecting a bunch of sources, and then poring them over for relevant information. Or writing a paper, only to realize you forgot to write down the citation, so you need to go digging for it. Or, for those rare few who try to read the sources of articles or books they read, it can be a lot of work to track down whether the source actually says what the writer alleges! This is meant to help make all of that far less painful – by letting you query the data directly.

As a case study, I want to show you my workflow I’ve been using to feed Pantheon with information. Of course the first thing I did was fed it a bunch of books from Project Gutenberg, but because Pantheon accepts uploads of several filetypes such as PDF, .txt, .epub, and Markdown, you can feed it all sorts of diverse information. I use a browser extension called MarkDownload which can save webpages as a Markdown file. Here I visited a PodScript generated transcript from Dr. David Sinclair’s podcast “Lifespan”, and saved the transcript in Markdown format using the browser extension. Let’s start with asking ChatGPT.

This is a predictable result – we came up against GPT’s knowledge cutoff, and instead got a good but very general answer about David Sinclair and his podcast. Pasting the transcript into the window produces another predictable result:

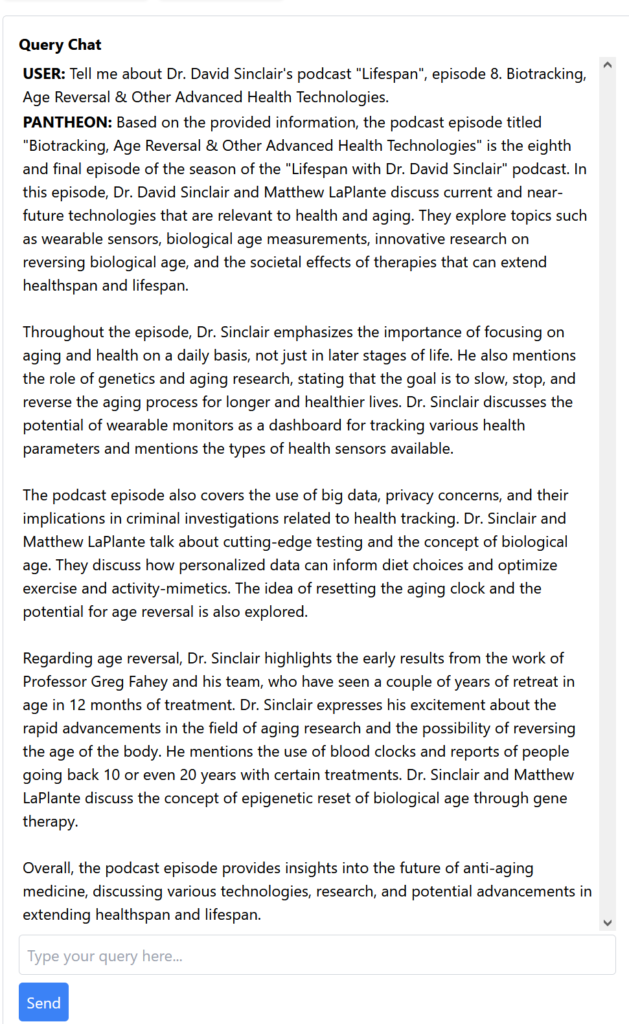

The only real solution to this problem is to break the podcast transcript into pieces and feed it piece by piece, but this is annoying and time consuming, and ChatGPT’s conversational memory is inconsistent, so it will often ‘forget’ what you told it. So next let’s upload the transcript into Pantheon, and ask it about the podcast. Note, we’re still working on a lot of these components, so they might look different on launch:

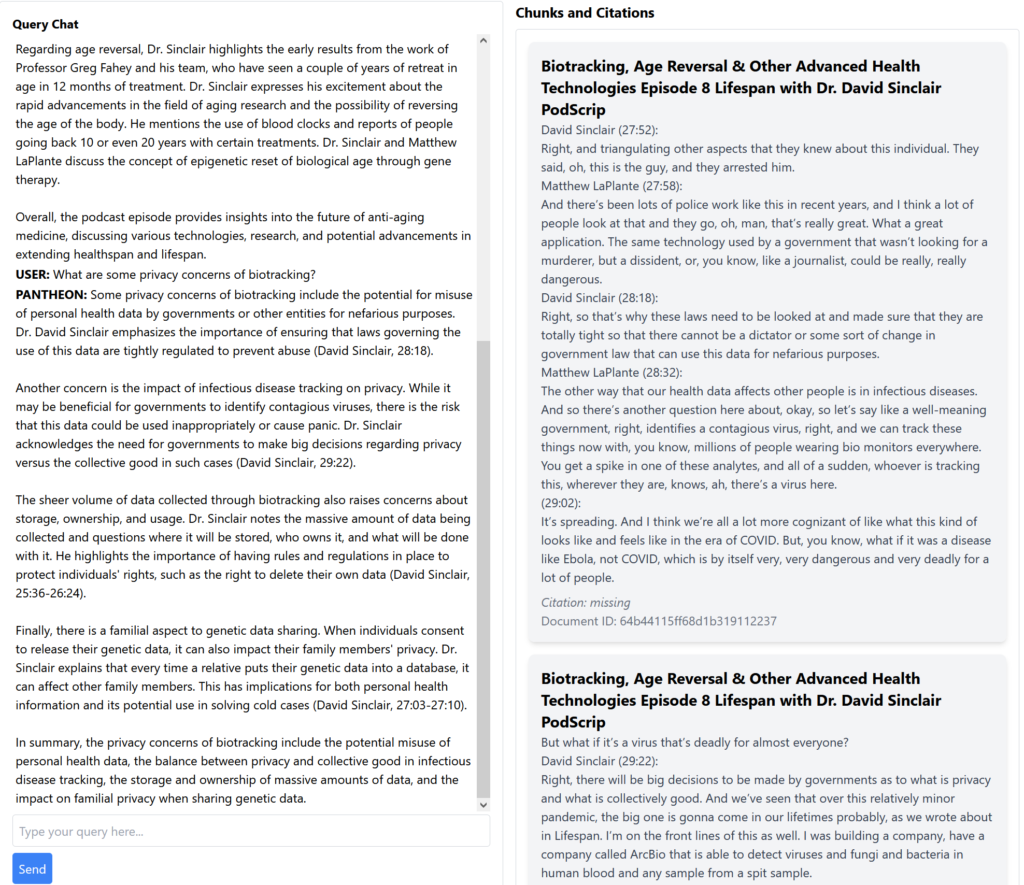

As you can see, now the model is recognizing my question and successfully querying the podcast – and just as importantly, it’s focusing on the content of the document! This means that you will get answers from the set of data you provided, instead of the often sanitized and sometimes hallucinated outputs of the larger model. You can also see in the citations section in the screenshot below exactly the parts of the transcript that were used to populate Pantheon’s answers, which means you can always check to make sure what the AI is telling you actually aligns to its sources! Our philosophy is essentially that we don’t want you to need to take anything the AI says purely on trust. Here is an expanded view with the “Citations” section, which displays the parts of the document the search has identified as relevant, which are what fed the model’s answer.

You could create a project that contained all of the transcripts for every Lifespan podcast, and Pantheon will be able to query the entire set for relevant answers to your questions. There are countless applications for this sort of thing – some I’ve tried are feeding it laws I wanted to understand better, medical studies, and books I want to brush up on. The tool is allowing you to essentially talk to documents! Remember, though – garbage in, garbage out. If you give Pantheon a bad diet of really low quality or poorly formatted information, your responses will be terrible, too. An important thing to remember is that AI such as this won’t intrinsically tell you true information, it will report to you what it’s been told. You need to make sure you trust your sources.

Stay tuned – my next article will discuss another feature I’m really excited about, called “Personas”. Personas allow the user to add additional context over their query which informs the assistant’s response – such as personality, or certain rules for how the response should be formatted. For example, you may prompt your AI assistant to be more skeptical and question the source material, or respond with more optimism.

If you want to try out Pantheon, we’ll soon be launching it with a selection of pre-made projects using material such as Project Gutenberg’s vast repository of public domain knowledge, as well as some transcripts of some of our favorite podcasts, like Huberman Lab. Stay tuned! We’ve just launched a Twitter, so if you want to stay up to date, follow us at @Pantheon_AI.